Using Yambo in parallel

| Line 84: | Line 84: | ||

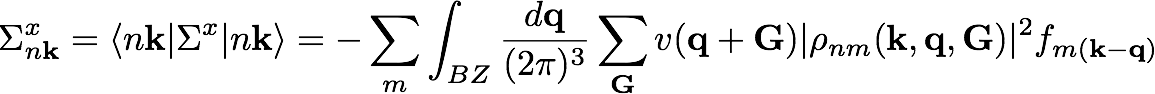

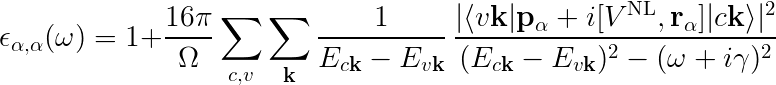

Concerning parallelism, let us focus at first on a q-resolved quantity like the IP optical response: | Concerning parallelism, let us focus at first on a q-resolved quantity like the IP optical response: | ||

[[File:CH1.png|none| | [[File:CH1.png|none|x60px|Yambo tutorial image]] | ||

* Here, in order to obtain the dielectric function, either in the optical limit (q->0) or at finite q, one basically needs to sum over valence-conduction transitions (v,c) for each k point in the BZ. The calculation can be made easily parallel over these indexes. | |||

* This is done at the '''MPI level''' (distributing both memory and computation), and also by '''OpenMP threads''', just distributing the computational load. | |||

* In this very specific case, OpenMP also requires some extra memory workspace (i.e. by increasing the number of OMP threads, the memory usage will also increase). | |||

==GW in parallel== | ==GW in parallel== | ||

Revision as of 10:42, 13 April 2017

This module presents examples of how to run Yambo in a parallel environment.

Prerequisites

Previous modules

- Initialization for bulk hBN.

You will need:

- The SAVE databases for bulk hBN (Download here)

- The yambo executable

Yambo Parallelism in a nutshell

Yambo implements a hybrid MPI+OpenMP parallel scheme, suited to run on large partitions of HPC machines (as of April 2017, runs on several tens of thousands of cores, with a computational power above 1 PFlop/s, have been achieved).

- MPI is particularly suited to distributing both memory and computation, and has a very efficient implementation in Yambo, which needs very little communication but may be prone to load imbalance (see parallel_tutorial).

- OpenMP instead works within a shared-memory paradigm, meaning that multiple threads compute in parallel on the same data in memory (no memory replication, at least in principle).

- With Yambo, use MPI parallelism as much as possible (i.e. as far as memory allows), then resort to OpenMP parallelism to exploit more cores and computing power without increasing memory usage any further.

- The number of MPI tasks and OpenMP threads per task can be controlled in a standard way, e.g. as

$ export OMP_NUM_THREADS=4
$ mpirun -np 12 yambo -F yambo.in -J label

resulting in a run exploiting up to 48 threads (12 MPI tasks times 4 OpenMP threads each). This fits best on 48 physical cores, although hyper-threading (i.e. more threads than cores) can be used to keep the computing units busy.
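The thread count quoted above is simply the product of MPI tasks and OpenMP threads per task. A minimal sketch of that bookkeeping follows; `total_threads` and `MPI_TASKS` are hypothetical names introduced here for illustration, not part of Yambo, and the launch line is only printed so the snippet runs without Yambo installed.

```shell
# Sketch only: total_threads is a hypothetical helper, not part of Yambo.
# Total threads of a hybrid run = MPI tasks x OpenMP threads per task.
total_threads() {
    echo $(( $1 * $2 ))
}

MPI_TASKS=12               # value passed to mpirun -np
export OMP_NUM_THREADS=4   # OpenMP threads per MPI task

echo "total threads: $(total_threads "$MPI_TASKS" "$OMP_NUM_THREADS")"
# Print the launch line instead of executing it, so the sketch runs without Yambo.
echo "mpirun -np $MPI_TASKS yambo -F yambo.in -J label"
```

Comparing the printed total with the number of physical cores in the allocation is a quick way to see whether a job oversubscribes the node.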

- A fine tuning of the parallel structure of Yambo (both MPI and OpenMP) can be obtained by operating on specific input variables (run level dependent), which can be activated, during input generation, via the flag

$ -V par

(verbosity high on parallel variables).

- Yambo can take advantage of parallel dense linear algebra (e.g. via [ScaLAPACK], SLK in the following). Control is provided by input variables (see e.g. RPA response in parallel).

- When running in parallel, one report file is written, while multiple log files are dumped (one per MPI task, by default) and stored in a newly created ./LOG folder. When running with thousands of MPI tasks, the number of log files can be reduced by setting, in the input file, something like:

NLogCPUs = 4 # [PARALLEL] Live-timing CPU`s (0 for all)
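To see how many log files a run actually produced, the files in the log folder can be counted. The sketch below assumes that log files are named `l-*` (as in the `l-run_mpi8_omp1_HF_and_locXC_CPU_1` example on this page) and sit directly inside `./LOG`; `count_logs` is a hypothetical helper, not a Yambo tool.

```shell
# Sketch only: count_logs is a hypothetical helper, not part of Yambo.
# Count regular files named l-* directly inside a directory (default: ./LOG).
count_logs() {
    find "${1:-./LOG}" -maxdepth 1 -type f -name 'l-*' 2>/dev/null | wc -l | tr -d ' '
}

# Prints 0 if the directory does not exist or holds no log files.
echo "log files: $(count_logs ./LOG)"
```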

In the following we give direct examples of parallel setup for some among the most standard Yambo kernels.

HF run in parallel

Full theory and instructions about how to run HF (or, better, exchange self-energy) calculations are given in Hartree Fock.

Concerning parallelism, the quantities to be computed are:

Basically, for every orbital nk we want to compute the exchange contribution to the quasiparticle (qp) correction. In order to do this, we need to perform a sum over q vectors and a sum over occupied bands (to build the density matrix).
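For reference, the exchange self-energy matrix element described here is commonly written in the form below. This is a sketch in assumed notation, not necessarily the exact expression of the original page: v is the bare Coulomb interaction, f the occupation factor, G a reciprocal lattice vector, and \rho_{nm}(k,q,G) = \langle nk | e^{i(q+G)\cdot r} | m\,k-q \rangle. The indices nk, q and m are the ones referred to in the text.

```latex
\Sigma^{x}_{n\mathbf{k}}
  = -\sum_{m} \int_{\mathrm{BZ}} \frac{d\mathbf{q}}{(2\pi)^{3}}
    \sum_{\mathbf{G}} v(\mathbf{q}+\mathbf{G})\,
    \bigl|\rho_{nm}(\mathbf{k},\mathbf{q},\mathbf{G})\bigr|^{2}\,
    f_{m\,\mathbf{k}-\mathbf{q}}
```

The occupation factor restricts the sum over m to occupied bands, which is why the inner band sum is short compared with the number of nk states.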

- Generate the input file asking for parallel verbosity:

$ yambo -x -V par -F hf.in

- By default, OpenMP acts on spatial degrees of freedom (both direct and reciprocal space) and takes care of FFTs. Do not forget to set the OMP_NUM_THREADS variable (set it to 1 to avoid OpenMP parallelism):

$ export OMP_NUM_THREADS=1

or

$ export OMP_NUM_THREADS=<integer_larger_than_one>

- By default, the MPI parallelism will distribute both computation and memory over the bands in the inner sum (b). Without editing the input file, simply run:

$ mpirun -np 4 yambo -F hf.in -J <run_label>

- Alternatively, MPI parallelism can work over three different levels, q, qp and b, at the same time:

q: parallelism over transferred momenta (q in Eq. above)
qp: parallelism over qp corrections to be computed (nk in Eq.)
b: parallelism over (occupied) density matrix (or Green's function) bands (m in Eq.)

Taking the case of hBN, in order to exploit this parallelism over 8 MPI tasks, set e.g.:

SE_CPU= " 1 2 4" # [PARALLEL] CPUs for each role
SE_ROLEs= "q qp b" # [PARALLEL] CPUs roles (q,qp,b)

Then run as

$ mpirun -np 8 yambo -F hf.in -J run_mpi8_omp1

Note that the product of the numbers in SE_CPU needs to be equal to the total number of MPI tasks, otherwise yambo will switch back to default parallelism (a warning is provided in the log).
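The consistency rule above can be checked before submitting a job. The following is a minimal sketch: `se_cpu_product` is a hypothetical helper (not a Yambo command) that multiplies the integers of an SE_CPU-like string, and `SE_CPU` and `NP` are ordinary shell variables assumed to mirror the input file and the `mpirun -np` value.

```shell
# Sketch only: se_cpu_product is a hypothetical helper, not part of Yambo.
# Multiply the whitespace-separated integers of an SE_CPU-like string.
se_cpu_product() (
    product=1
    for n in $1; do
        product=$((product * n))
    done
    echo "$product"
)

SE_CPU="1 2 4"   # same numbers as in the input file
NP=8             # value passed to mpirun -np

if [ "$(se_cpu_product "$SE_CPU")" -eq "$NP" ]; then
    echo "OK: SE_CPU matches $NP MPI tasks"
else
    echo "Mismatch: Yambo would switch back to the default parallelism"
fi
```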

Looking at the report file (here r-run_mpi8_omp1_HF_and_locXC), one finds:

[01] CPU structure, Files & I/O Directories
===========================================

* CPU-Threads :8(CPU)-1(threads)-1(threads@SE)
* CPU-Threads :SE(environment)- 1 2 4(CPUs)-q qp b(ROLEs)
* MPI CPU : 8
* THREADS (max): 1
* THREADS TOT(max): 8

Now we can inspect the files created in the ./LOG directory, e.g. ./LOG/l-run_mpi8_omp1_HF_and_locXC_CPU_1, where we find:

<---> P0001: [01] CPU structure, Files & I/O Directories
<---> P0001: CPU-Threads:8(CPU)-1(threads)-1(threads@SE)
<---> P0001: CPU-Threads:SE(environment)- 1 2 4(CPUs)-q qp b(ROLEs)
...
<---> P0001: [PARALLEL Self_Energy for QPs on 2 CPU] Loaded/Total (Percentual):70/140(50%)
<---> P0001: [PARALLEL Self_Energy for Q(ibz) on 1 CPU] Loaded/Total (Percentual):14/14(100%)
<---> P0001: [PARALLEL Self_Energy for G bands on 4 CPU] Loaded/Total (Percentual):3/10(30%)
<---> P0001: [PARALLEL distribution for Wave-Function states] Loaded/Total(Percentual):84/140(60%)

providing the details of the memory and computation distribution over the different levels. This log is from processor 1 (as indicated by the _CPU_1 suffix). Similar information is provided for all CPUs. If different CPUs show very different distribution levels, load imbalance is likely (e.g., try with 6 MPI tasks parallel over bands).
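The Loaded/Total figures can be anticipated with simple arithmetic. The sketch below assumes that items are split as evenly as possible among tasks; this is an illustrative assumption, and Yambo's actual distribution scheme may differ. `shares` is a hypothetical helper that prints how many items each task receives.

```shell
# Sketch only: shares is a hypothetical helper; an even block split is assumed.
# Usage: shares ITEMS TASKS -> items per task, one number per task.
shares() (
    items=$1
    tasks=$2
    base=$((items / tasks))    # items every task gets
    extra=$((items % tasks))   # the first 'extra' tasks get one more
    i=1
    out=""
    while [ "$i" -le "$tasks" ]; do
        if [ "$i" -le "$extra" ]; then n=$((base + 1)); else n=$base; fi
        out="$out $n"
        i=$((i + 1))
    done
    echo $out
)

shares 10 4   # 10 bands over 4 tasks
shares 10 6   # 10 bands over 6 tasks
```

With 10 bands over 4 tasks the first task holds 3 bands, consistent with the 3/10 (30%) line in the log above. With 6 tasks some tasks hold 2 bands and others 1, so the tasks with the smaller share sit idle part of the time.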

For Yambo versions <= 4.1.2, Yambo may not be able to find a proper default parallel structure (try e.g. the above example asking for 20 MPI tasks, while you only have 8 bands to parallelise over). In these cases the calculation crashes and an error message is written to the report file:

[ERROR]Impossible to define an appropriate parallel structure

In these cases it is mandatory to specify a proper parallel structure explicitly. This issue has been mostly overcome from Yambo v. 4.2 onwards.

RPA response in parallel

Full theory and instructions about how to run independent particle (IP) or RPA linear response calculations are given e.g. in Optics at the independent particle level or Dynamic screening (PPA).

Concerning parallelism, let us focus at first on a q-resolved quantity like the IP optical response:

- Here, in order to obtain the dielectric function, either in the optical limit (q->0) or at finite q, one basically needs to sum over valence-conduction transitions (v,c) for each k point in the BZ. The calculation can easily be parallelised over these indices.

- This is done at the MPI level (distributing both memory and computation), and also by OpenMP threads, just distributing the computational load.

- In this very specific case, OpenMP also requires some extra memory workspace (i.e. by increasing the number of OMP threads, the memory usage will also increase).